1.1 MEASUREMENTS

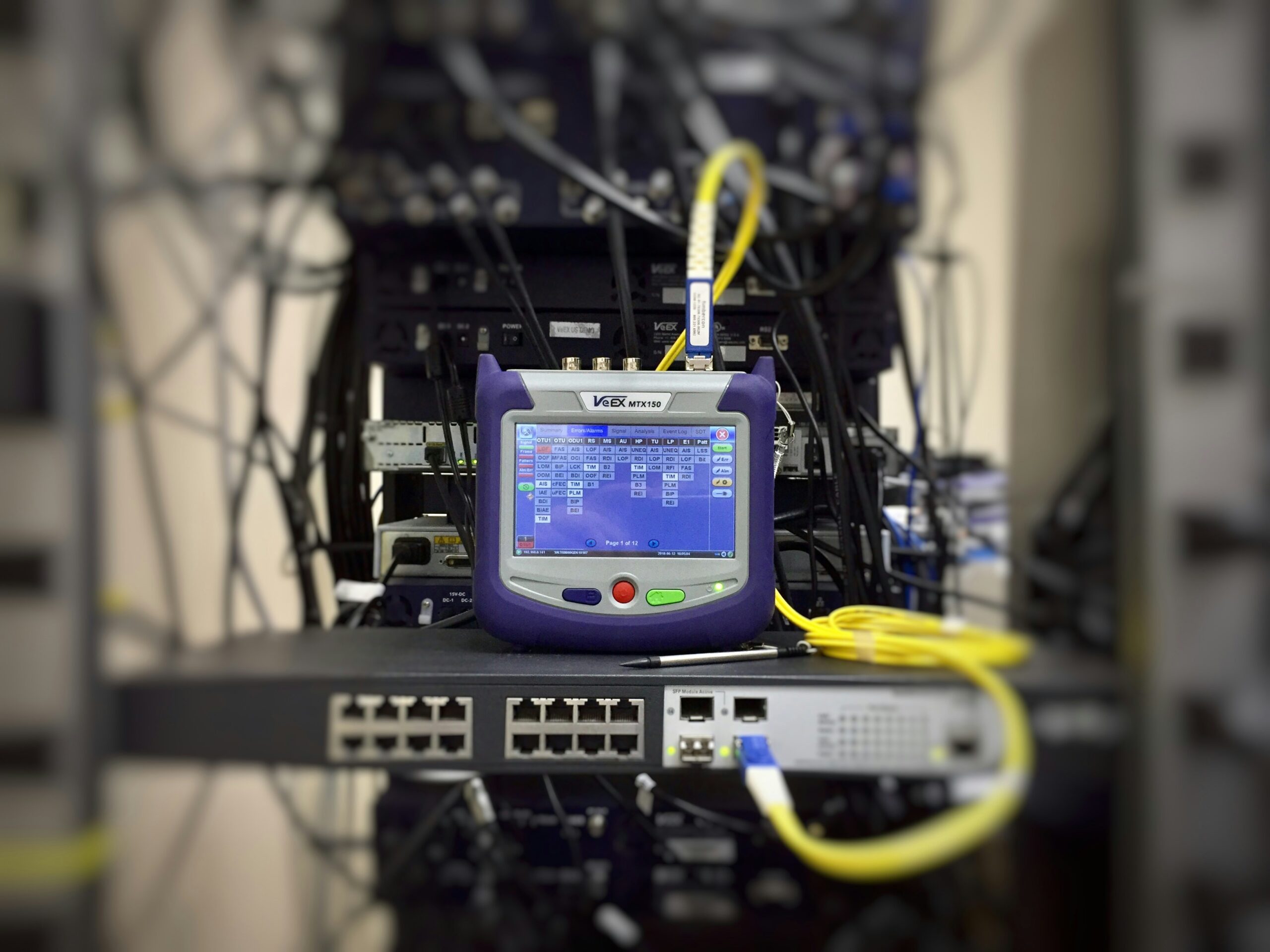

Measurement is essentially the act, or the result, of a quantitative comparison between a given quantity and a quantity of the same kind chosen as a unit. The result of a measurement is expressed by a number representing the ratio of the unknown quantity to the adopted unit of measurement. The physical embodiment of the unit of measurement, as well as that of its submultiple or multiple value, is called a standard. The device used for comparing the unknown quantity with the unit of measurement or a standard quantity is called a measuring instrument.

Measurement provides us with a means of describing natural phenomena in quantitative terms. As a fundamental principle of science, Lord Kelvin stated “When you can measure what you are speaking about and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meagre and unsatisfactory kind. It may be the beginning of knowledge, but you have scarcely, in your thoughts, advanced to the stage of science”. In order to make constructive use of the quantitative information obtained from an experiment, there must be a means of measuring and controlling the relevant properties precisely. The reliability of control is directly related to the reliability of measurement.

1.2 PERFORMANCE CHARACTERISTICS

A knowledge of the performance characteristics of an instrument is essential for selecting the most suitable instrument for a specific measuring job. There are two basic kinds of performance characteristics, namely static and dynamic characteristics.

1.2.1 Static Characteristics. The static characteristics of an instrument are, in general, considered for devices which are employed to measure an unvarying process condition. All the static performance characteristics are obtained by one form or another of a process called calibration. Several terms are frequently used to describe the static performance of an instrument. They are described below in brief.

- Instrument is a device or mechanism used for determining the value or magnitude of a quantity under measurement.

- Measurement is a process of determining the amount, degree, or capacity by comparison (direct or indirect) with the accepted standards of the system of units being used.

- Accuracy refers to the degree of closeness or conformity to the true value of the quantity under measurement. The only time a measurement can be exactly correct is when it is a count of a number of separate items, e.g., a number of components or a number of electrical pulses. In all other cases there will be a difference between the true value and the value the instrument indicates, records or controls to, i.e., there is a measurement error.

- Precision is a measure of the consistency or repeatability of measurements, i.e. successive readings do not differ appreciably. (Precision is the consistency of the measurement output for a given value of input.) It reflects the uncertainty due to random differences in results, commonly expressed as the deviation from the mean value. Precision gives no guarantee of accuracy.

- Sensitivity. The ratio of a change in output magnitude to the change in input which causes it after the steady-state has been reached is called the sensitivity.

The sensitivity will, therefore, be a constant in a linear instrument or element, i.e. one where equal changes of the input signal cause equal changes of the output.

Sensitivity is usually required to be high.

- Resolution. The least interval between two adjacent discrete details, which can be distinguished one from the other, is called the resolution. It may be expressed as an actual value or as a fraction or percentage of the full-scale value.

- Error. The algebraic difference between the indicated value and the true value of the measured signal is called the error.

i.e. Error = Indicated value – True value

- Expected value. The design value, i.e. the most probable value that calculations indicate one should expect to measure.

- Uncertainty. The range within which the true value is estimated to lie.

- Threshold. If the input to an instrument is very gradually increased from zero, there will be some minimum value below which no output change can be observed or detected. This minimum value defines the threshold of the instrument. The phenomenon is specified by the first detectable output change which is noticeable or measurable.
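Several of the static terms above can be illustrated numerically. The following is a minimal sketch, not a calibration procedure: the readings, the true value, and the function names `static_summary` and `sensitivity` are hypothetical and chosen only for illustration. It assumes that the mean error of repeated readings serves as an indicator of accuracy, that their sample standard deviation serves as an indicator of precision, and that sensitivity can be estimated as the least-squares slope of output against input.

```python
import statistics


def static_summary(readings, true_value):
    """Summarise repeated readings of one fixed, known input (hypothetical helper)."""
    # Error = Indicated value - True value, applied to each reading
    errors = [reading - true_value for reading in readings]
    return {
        "errors": errors,
        # Mean error: how far the readings sit from the true value (accuracy indicator)
        "mean_error": statistics.mean(readings) - true_value,
        # Sample standard deviation: scatter of the readings (precision indicator)
        "spread": statistics.stdev(readings),
    }


def sensitivity(inputs, outputs):
    """Least-squares slope of steady-state output versus input (hypothetical helper)."""
    mean_in = statistics.mean(inputs)
    mean_out = statistics.mean(outputs)
    numerator = sum((x - mean_in) * (y - mean_out) for x, y in zip(inputs, outputs))
    denominator = sum((x - mean_in) ** 2 for x in inputs)
    return numerator / denominator


# Hypothetical data: five readings of an input whose true value is 10.0 units
readings = [10.2, 10.3, 10.2, 10.3, 10.2]
summary = static_summary(readings, true_value=10.0)
print(f"mean error = {summary['mean_error']:.3f}")  # readings are consistently high
print(f"spread     = {summary['spread']:.3f}")      # but closely grouped

# Hypothetical linear instrument: equal input steps give equal output steps
slope = sensitivity([0.0, 10.0, 20.0, 30.0], [0.0, 0.5, 1.0, 1.5])
print(f"sensitivity = {slope:.3f} output units per input unit")
```

In this made-up data set the readings agree closely with one another yet all lie above the true value, which is the situation the Precision entry describes: good repeatability without any guarantee of accuracy.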

1.2.2 Dynamic Characteristics. When an input is applied to an instrument or measuring system, it cannot attain its final steady-state position instantaneously. The measurement system passes through a transient state before it reaches its final steady-state position. Some measurements are carried out under conditions that allow sufficient time for the instrument or measurement system to settle to its final steady-state position. Under such circumstances the study of the behaviour of the system in the transient state, known as the transient response, is not of much importance; only the steady-state response need be considered. On the other hand, in many measurement systems it becomes imperative to study the system response under both transient and steady-state conditions. In many cases the transient response of the system is more important than its steady-state response. Instruments and measurement systems do not respond to the input immediately because of the presence of energy storage elements (such as electrical inductance and capacitance, mass, fluid and thermal capacitance, etc.) in the system. The system exhibits a characteristic sluggishness due to the presence of these elements.
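The sluggishness described above can be sketched with the simplest common model, a first-order instrument with a single energy storage element. This model, the time constant `tau`, the gain, and the 2% settling band are assumptions introduced here for illustration; the text does not specify any particular model. Under that assumption the response to a step input applied at t = 0 is y(t) = K·x·(1 − e^(−t/τ)).

```python
import math


def first_order_step_response(t, step_size, tau, gain=1.0):
    """Output of an assumed first-order instrument at time t after a step input.

    tau  : time constant in seconds (assumed parameter)
    gain : static sensitivity K (assumed parameter)
    """
    if t < 0:
        return 0.0  # the step has not been applied yet
    return gain * step_size * (1.0 - math.exp(-t / tau))


def settling_time(tau, tolerance=0.02):
    """Time for the output to come within `tolerance` (as a fraction) of its final value."""
    return -tau * math.log(tolerance)


# Hypothetical thermometer: tau = 5 s, input suddenly stepped from 0 to 100 units
tau = 5.0
for t in (0.0, 5.0, 10.0, 20.0):
    print(f"t = {t:4.1f} s  indicated = {first_order_step_response(t, 100.0, tau):6.2f}")

print(f"time to settle within 2% = {settling_time(tau):.2f} s")
```

After one time constant the indicated value has covered only about 63% of the step, so a reading taken too early reflects the transient state rather than the steady-state value.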

In measurement systems whose inputs are dynamic in nature, the input varies from instant to instant, and so does the output. The behaviour of the system under such conditions is described by the dynamic response of the system, and its characteristics are given below in brief.

- Dynamic Error. It is the difference between the true value of a quantity changing with time and the value indicated by the instrument, provided the static error is zero. Total dynamic error is the phase difference between the input and the output of the measurement system.

- Fidelity. It is the ability of the system to reproduce the output in the same form as the input. Time lag or phase difference is not included in the definition of fidelity. Ideally a system should have 100% fidelity: the output appears in the same form as the input and the system produces no distortion.
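As a rough illustration of the two terms above, consider a sinusoidal input applied to an assumed first-order instrument with time constant `tau` and unit static sensitivity (so the static error is zero). The model, the parameter values, and the function names are hypothetical. The sign convention, indicated minus true, is chosen here to match the static definition Error = Indicated value – True value. For this model the steady-state output is attenuated by 1/√(1 + (ωτ)²) and lags the input by arctan(ωτ).

```python
import math


def amplitude_ratio(omega, tau):
    """Steady-state output/input amplitude ratio of an assumed first-order instrument."""
    return 1.0 / math.sqrt(1.0 + (omega * tau) ** 2)


def phase_lag(omega, tau):
    """Phase lag (radians) of the output behind the input for the same model."""
    return math.atan(omega * tau)


def dynamic_error(t, amplitude, omega, tau):
    """Indicated minus true value at time t, in the sinusoidal steady state."""
    true_value = amplitude * math.sin(omega * t)
    indicated = (amplitude * amplitude_ratio(omega, tau)
                 * math.sin(omega * t - phase_lag(omega, tau)))
    return indicated - true_value


# Hypothetical case: input of unit amplitude at omega = 2 rad/s, tau = 0.5 s
omega, tau = 2.0, 0.5
print(f"amplitude ratio = {amplitude_ratio(omega, tau):.3f}")
print(f"phase lag       = {math.degrees(phase_lag(omega, tau)):.1f} degrees")
print(f"dynamic error at t = 0: {dynamic_error(0.0, 1.0, omega, tau):.3f}")
```

In this sketch the output remains a sinusoid of the same frequency, so its form is preserved even though it is delayed and reduced in amplitude; the delay is the phase difference that the Dynamic Error entry refers to.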